Opened 6 years ago

Last modified 5 years ago

#3771 new defect

Run tests on Travis

| Reported by: | pmav99 | Owned by: | |

|---|---|---|---|

| Priority: | normal | Milestone: | 7.8.3 |

| Component: | Tests | Version: | svn-trunk |

| Keywords: | Cc: | ||

| CPU: | Unspecified | Platform: | Unspecified |

Description

At the moment, Travis only builds GRASS without running any tests. The tests should be running on Travis for each commit and there should be notifications on the list whenever a commit breaks the tests.

Attachments (1)

Change History (26)

comment:1 by , 6 years ago

follow-up: 4 comment:3 by , 6 years ago

Running tests on CI doesn't really make sense if you have failing tests: http://fatra.cnr.ncsu.edu/grassgistests/summary_report/nc/index.html

So the next step would be to make the tests pass.

- Ideally, the failing tests should be fixed.

- If that is not possible the tests should be marked to be skipped

- If that is not possible then I would suggest removing them for now + opening an issue to re-add them when they are fixed (one issue per removed test)

https://docs.python.org/2/library/unittest.html#skipping-tests-and-expected-failures

follow-up: 5 comment:4 by , 6 years ago

Replying to pmav99:

Running tests on CI doesn't really make sense if you have failing tests: http://fatra.cnr.ncsu.edu/grassgistests/summary_report/nc/index.html

To my knowledge, most of the tests that are currently failing, started failing due to fundamental changes from the introduction of Python 3, e.g. everything related to bytes vs. strings...

So we would have to sort out those from tests that are failing for other reasons...

So the next step would be to make the tests pass.

In general +1, but again, lets try to distinguish Pythen 3 related test failure from all others... Currently, somehow, most failing tests tell us how far we are from Python 3 (and 2) support...

comment:5 by , 6 years ago

Replying to sbl:

To my knowledge, most of the tests that are currently failing, started failing due to fundamental changes from the introduction of Python 3, e.g. everything related to bytes vs. strings...

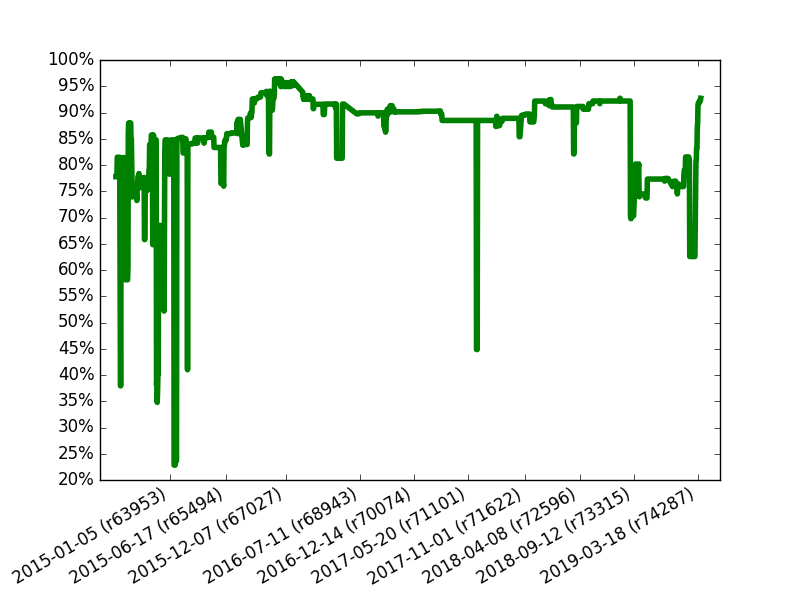

If you scroll down far enough here there is this graph:

Last September, indeed, there was a spike of failing tests but still ~10% of the tests were practically always failing.

That being said, supporting both Python 2 and 3 is a requirement encountered primarily in libraries, not applications. Applications usually only need to support a single Python version. GRASS does provide an API, but, at least from where I stand, it is primarily an application. Nevertheless, the decision that GRASS 7.8 will support both Python 2 and 3 has been made and unless it is revoked, the code needs to support both versions.

Nowadays, there is a great deal of experience in the Python community WRT both porting code from Python 2 to 3 and to supporting both Python versions from a single codebase. The latter usually involves using projects like https://pypi.org/project/six/ and/or https://python-future.org/. Using them is of course not mandatory. Most projects only need a small part of their functionality, so they can often get away with just a [{{compat.py}}} module and appropriate imports. On projects of significant complexity though, it is often a good idea to use them.

Long story short, it is indeed more difficult to maintain a cross-version compatible codebase but with some concessions it is an achievable goal.

In my humble view, the fact that the tests are failing doesn't have to do with with Python 2 or Python 3 or even with the cross-version compatibility. There are two rules that are pretty much unanimously accepted in the software development industry:

- Don't break the build.

- You break the build, you fix it.

(obviously, running the tests is considered part of the build)

The afore-mentioned graph shows that GRASS devs don't run the tests as part of their development routine. I argue that this needs to change. Arguably, no one wants to wait for a long running testsuite to finish. And that's absolutely natural. Honestly speaking, no one should have to wait. That's what CI is for. To build the code and run the tests.

The gist of the issue here is supporting GRASS' growth. Automated builds and improved testing procedures are a prerequisite of this goal. An important checkpoint in this effort is achieving a green build.

follow-up: 21 comment:6 by , 6 years ago

In principle, fully agreed on all points regarding tests.

Just to add some history. Python 3 support was to a significant amount worked on in a GSoC project in 2018 (https://lists.osgeo.org/pipermail/soc/2018-August/004186.html), although that was a big step forward, open issues remained. And after that project finished, a decision had to be made to either merge the unfinished code (leading to failing tests) or wait and risk that merging the code later would become harder and harder. Decision was made for the latter (see r73229).

Comparing test results before and after this commit (2018-09-02 vs. 2018-09-03) can give an idea about failing tests because of introduction of Python 3 vs. other failures:

- http://fatra.cnr.ncsu.edu/grassgistests/reports_for_date-2018-09-02-07-00/report_for_nc_basic_spm_grass7_nc/testsuites.html

- http://fatra.cnr.ncsu.edu/grassgistests/reports_for_date-2018-09-03-07-00/report_for_empty_xy_all/testsuites.html

So, making tests pass is a matter of resources (time) I guess, and I am sure contributions are more than welcome. The question is, what is the best way forward. Suggestions fromm my side would be to:

- Make the test suite and test coverage a topic for a community sprint. If those who know the test suite well could help others (like me) to improve their procedures that would be really cool and appreciated.

- Prioritize move to git if that helps people like you, Panos, to contribute and help with Python 3 support (which is supposed to be a focus for the 7.8 release) Really good to see your recent activity here, BTW!

- Crack down on the other 10% of failing test (fix or deactivate temporarily if necessary as you suggest)

follow-up: 22 comment:7 by , 6 years ago

Another relevant issue are test datasets, it seems.

Some maps used in tests are not available in all sample data used for testing (or with different names. If I remeber correctly, some effort has been put on this relatively newly, as e.g. maps are copied to the correct name used in the testsuite, before tests are run.

For example, in db/db.copy/testsuite/test_dbcopy.py the map "zipcodes" is used, which is available in nc_basic, while a suitable map in nc_spm_08 is named zipcodes_wake, the demolocation does not have a comparable map. The documentation suggests to use nc_spm dataset for testing (https://grass.osgeo.org/grass76/manuals/libpython/gunittest_testing.html).

Should tests (where necessary) be updated to work with nc_spm? (or should input map names be chocen based on name of the location a test is ran in)?

I also had a look at how to skip tests (e.g. if maps are missing in the location used to run tests). But could not figure out haow to do that. Hints are welcome!

comment:8 by , 6 years ago

@sbl Skipping tests can be with this. I haven't really tested how it works with gunittest, but my guess is that it should work out of the box.

follow-up: 10 comment:9 by , 6 years ago

Some updates after a first attempt at fixing failling tests. The tests test_v_in_pdal_basic and test_v_in_pdal_filter should be probably moved from ./vector/v.in.lidar to ./vector/v.in.pdal However, tests should only be executed if the module is compiled and avaialable (does not seem to be the case for v.in.pdal). The latter is implemented in r74287.

Tests with issues with input data, where values changed (possibly also because of changed input data):

- ./vector/v.in.lidar – mask_test

- ./vector/v.univar – v_univar_test

- ./raster/r.basins.fill/testsuite/testrbf.py

- ./vector/v.surf.rst – test_vsurfrst (elev_points is 3D)

- ./lib/python/gunittest – test_assertions_vect (column GLEVEL in map schools is not numeric)

- ./imagery/i.vi/testsuite/test_vi.py

Bugs (suffix gets messed up):

- ./raster/r.horizon/testsuite/test_r_horizon.py

Failing tests due to changes in functions:

- ./lib/python/temporal – unittests_temporal_raster_conditionals_complement_else

Test with various issues failures (many of the temporal tests should be fixed by making functions accept unicode in r74285 and r74286)

- ./scripts/g.extension – test_addons_modules

- ./lib/python/pygrass/raster/testsuite/test_history.py

- ./lib/python/pygrass/modules/testsuite/test_import_isolation.py

- ./temporal/t.vect.algebra/testsuite/test_vector_algebra.py

- ./temporal/t.rast3d.algebra/testsuite/test_raster3d_algebra.py

- ./temporal/t.rast.accumulate/testsuite/test_accumulation.py

- ./temporal/t.rast.algebra/testsuite/test_raster_algebra_granularity.py

- ./temporal/t.rast.algebra/testsuite/test_raster_algebra.py

- ./temporal/t.rast.aggregate/testsuite/test_aggregation_absolute.py

- ./temporal/t.rast.accdetect/testsuite/test.t.rast.accdetect.sh

- ./temporal/t.rast.accdetect/testsuite/test_simple.py

- ./temporal/t.rast.accdetect/testsuite/test.t.rast.accdetect.reverse.sh

- ./lib/python/temporal/testsuite/unittests_temporal_raster_algebra_equal_ts.py

Tests where encoding is a problem:

- ./temporal/t.info

- ./vector/v.what – test_vwhat_layers does not fail on my system, maybe related to the locals on the server that runs the tests...

Tests where expected output changed from string to unicode

- ./lib/python/temporal/testsuite/test_temporal_doctests.py

- ./lib/python/pygrass/vector/testsuite/test_pygrass_vector_doctests.py

- ./lib/python/pygrass/raster/testsuite/test_pygrass_raster_doctests.py

comment:10 by , 6 years ago

@sbl Great work!

Replying to sbl:

The tests test_v_in_pdal_basic and test_v_in_pdal_filter should be probably moved from ./vector/v.in.lidar to ./vector/v.in.pdal However, tests should only be executed if the module is compiled and avaialable (does not seem to be the case for v.in.pdal). The latter is implemented in r74287.

You are right that the test_v_in_pdal_* should be moved to ./vector/v.in.pdal/. The cause for this was my mistake in #3792 (last two lines of the suggested fix).

WRT skipping the tests, I believe that you can also apply the skipIf decorator directly to the Testsuite. That being said, if possible, building on Travis should enable and test optional configurations too.

follow-up: 12 comment:11 by , 6 years ago

I think I can fix the g.extension issue tonight.

However, for the tests failing because of issues with the input data, it would be really useful to get access to the

nc_spm_full_v2alpha

location.

Unfortunately, I have not found it for download...

comment:12 by , 6 years ago

Replying to sbl:

I think I can fix the g.extension issue tonight.

However, for the tests failing because of issues with the input data, it would be really useful to get access to the

nc_spm_full_v2alphalocation.

Unfortunately, I have not found it for download...

Dataset now packaged and published by wenzeslaus:

by , 6 years ago

| Attachment: | testsuite.patch added |

|---|

follow-up: 14 comment:13 by , 6 years ago

Thank you @wenzeslaus

@everyone I used the attached patch to get the testsuite runner to use the new dataset.

comment:14 by , 6 years ago

comment:15 by , 6 years ago

Note 1: Some tests require certain compilation options to be enabled (e.g. pdal, liblas etc). If support is missing the tests are failing. Ideally these tests should be skipped instead.

Note 2: I did run the tests on an ubuntu VM where all the options were configured (except opencl and dwg). I did get several more failures than the ones reported here. More specifically on my VM:

(SVN revision 74319) Executed 233 test files in 0:29:16.353563. From them 194 files (83%) were successful and 39 files (17%) failed.

For comparison, the latest results from the test server are:

(SVN revision 74304) Executed 233 test files in 0:36:57.428963. From them 216 files (93%) were successful and 17 files (7%) failed.

I guess that this difference implies that the server is configured in a specific way which needs to be documented before we can replicate it on Travis.

follow-up: 20 comment:16 by , 6 years ago

At time the "fatra" server no longer runs, it is frozen at date

2019-03-26 03:01:54 74304 nc_spm_full_v2alpha 93% 98%

@vaclav: could you please check? thanks

http://fatra.cnr.ncsu.edu/grassgistests/summary_report/nc/index.html

follow-up: 19 comment:18 by , 6 years ago

Now multirunner also works with Python 3 and tests for P2 and P3 are more or less even...

However, a new issue pops up:

NameError: global name '_' is not defined

Also, in Py3 I had to deactivate the following tests as the cause multirunner to freeze...

lib/python/pygrass/modules/interface/testsuite/test_pygrass_modules_interface_doctests.py lib/python/temporal/testsuite/test_temporal_doctests.py temporal/t.vect.algebra/testsuite/test_vector_algebra.py

comment:19 by , 6 years ago

Replying to sbl:

Now multirunner also works with Python 3 and tests for P2 and P3 are more or less even...

However, a new issue pops up:

NameError: global name '_' is not defined

That's related to #3772. Do you remember which tests are the problematic ones?

comment:20 by , 6 years ago

Replying to neteler:

At time the "fatra" server no longer runs, it is frozen at date

2019-03-26 03:01:54 74304 nc_spm_full_v2alpha 93% 98%@vaclav: could you please check? thanks

http://fatra.cnr.ncsu.edu/grassgistests/summary_report/nc/index.html

Seems like a minor issue so far. Tests should still run locally (if you try). I should know more tomorrow (debug message added).

comment:21 by , 6 years ago

Replying to sbl:

- Crack down on the other 10% of failing test (fix or deactivate temporarily if necessary as you suggest)

You don't need 100% passing to run it on CI reasonably. That's the ideal state, but we can start with setting a threshold to what you consider OK. The idea is already there. Notice the colors of the percentages. What you need to add is mechanism for the main script to fail and not fail with a given success percentage value. This would catch serious problems, but that will be still quite a progress. So far it was mostly me checking the fatra server website and reporting it to mailing list (or, I think, directly to the committer in one recent case).

comment:22 by , 6 years ago

Replying to sbl:

Another relevant issue are test datasets, it seems.

As for (older) full and (newer) basic version of NC SPM, I think nc_spm_full_v2alpha solves it for now (not ideal, but works). Making nc_spm_full_v2alpha work is a good goal for the Travis runs now.

However, please also note the idea of different sample datasets, i.e. other than NC SPM (old/full or new/basic or full v2). Please see: GRASS-dev: Testsuite: script for easier use of test framework https://lists.osgeo.org/pipermail/grass-dev/2019-January/091011.html

There are different approaches how to handle the different datasets in tests and by the runner, but idea is 1) to make easy to write very specific tests, 2) to test in different conditions like latlon GRASS Location, and 3) to have tests which run in any GRASS Location and/or without any data (so you can e.g. do make test without getting 100 MB of sample data).

comment:23 by , 6 years ago

For the record: after Annas sweeping fixes for Python3 tests, the testsuite on my local system runs 97% of the test files and 98% of the tests successfully with Python3.

The remaining failing tests are (for Python3):

- ./gui/wxpython/core/testsuite/toolboxes.sh

- ./raster/r.contour/testsuite/testrc.py

- ./lib/python/temporal/testsuite/unittests_temporal_raster_conditionals_complement_else.py

- ./lib/python/temporal/testsuite/unittests_temporal_raster_algebra_equal_ts.py

- ./lib/python/pygrass/raster/testsuite/test_history.py

However, unfortunately, multirunner does not finish with Python 2 any more.... So, currently, the testsuite supports either Python 2 or Python 3, but not both...

comment:24 by , 6 years ago

For replication:

python lib/python/gunittest/multirunner.py --location nc_spm_full_v2alpha \ --location-type nc --grassbin bin.x86_64-pc-linux-gnu/grass77 --grasssrc ./ \ --grassdata $HOME/Documents/grassdata/

completes with Python 3, but not with Python 2 (Unicode error)

comment:25 by , 5 years ago

| Milestone: | → 7.8.3 |

|---|

Patches would be gratefully accepted!